Apache Spark RDD groupBy transformation

In our previous posts we talked about the groupByKey, map and flatMap functions. In this post we will learn about the RDD groupBy transformation in Apache Spark. As per the Apache Spark documentation, groupBy returns an RDD of grouped items, where each group consists of a key and a sequence of elements held in a CompactBuffer.

This operation may be very expensive. If you are grouping in order to perform an aggregation (such as a sum or average) over each key, using aggregateByKey or reduceByKey will provide much better performance.
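To make the performance note concrete, here is a minimal sketch of counting words per length with reduceByKey instead of grouping first. The object name `ReduceByKeySketch` and the helper `countByLength` are hypothetical names chosen for illustration, and the sketch assumes a local Spark session like the one used in the rest of this post.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper object, not part of the original example.
object ReduceByKeySketch {

  // Counts how many words exist for each word length.
  def countByLength(words: Seq[String]): Map[Int, Int] = {
    val sparkContext = SparkSession.builder()
      .master("local[*]")
      .appName("reduceByKey sketch")
      .getOrCreate()
      .sparkContext

    sparkContext.parallelize(words)
      .map(word => (word.length, 1)) // key each word by its length
      .reduceByKey(_ + _)            // partial sums are combined before the shuffle
      .collect()
      .toMap
  }
}
```

Because reduceByKey merges values for a key within each partition before shuffling, only one partial count per key and partition crosses the network, whereas groupBy ships every element of every group.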

Let’s start with a simple example. We have an RDD containing words as shown below.

    // RDD containing some words.
    val wordsRDD = sparkContext.parallelize(Array(
      "Tom", "Lenevo", "Anvisha",
      "John", "Jimmy", "Jacky",
      "John", "Jimmy", "Jimmy"
    ))

    // Let's print some data.
    wordsRDD.collect.foreach(println)

    // Output
    // Tom
    // Lenevo
    // Anvisha
    // John
    // ...

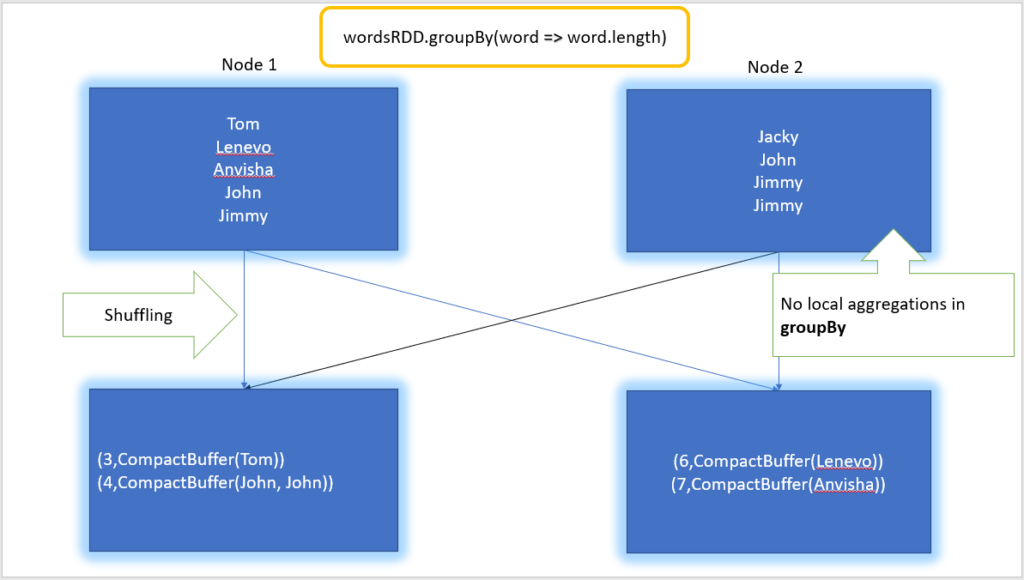

We will group the words based on their length. That means all words with the same length end up in the same group.

    // Using groupBy transformation to group elements based on their length.
    val groupRDD = wordsRDD.groupBy(word => word.length)

    // Let's print some data.
    groupRDD.collect.foreach(println)

    // Output
    // (3,CompactBuffer(Tom))
    // (4,CompactBuffer(John, John))
    // (5,CompactBuffer(Jimmy, Jacky, Jimmy, Jimmy))
    // (6,CompactBuffer(Lenevo))
    // (7,CompactBuffer(Anvisha))

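In the above example we can see that all words with the same length are in the same group. Note that the order in which the groups are printed depends on how the keys are partitioned, so it may differ from the listing above.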
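If you need a stable listing, one option is to sort by key before collecting. The following is a minimal sketch under that assumption; `SortedGroupsSketch` and `sortedGroupsByLength` are hypothetical names, and the elements inside each group are sorted as well because their order after a shuffle is not guaranteed either.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper object, not part of the original example.
object SortedGroupsSketch {

  // Groups words by length and returns the groups in ascending key order.
  def sortedGroupsByLength(words: Seq[String]): List[(Int, List[String])] = {
    val sparkContext = SparkSession.builder()
      .master("local[*]")
      .appName("sorted groupBy sketch")
      .getOrCreate()
      .sparkContext

    sparkContext.parallelize(words)
      .groupBy(word => word.length) // RDD[(Int, Iterable[String])]
      .mapValues(_.toList.sorted)   // fix the order inside each group
      .sortByKey()                  // fix the order of the groups
      .collect()
      .toList
  }
}
```

The sort adds another shuffle, so this is mainly useful for small results such as printed examples or tests.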

Let’s take another example. This time we will use groupBy to group all the words that start with the same character. The code snippet is shown below.

    // Using groupBy transformation to group elements based on their first character.
    val groupedRDDNew = wordsRDD.groupBy(word => word.charAt(0))

    // Let's print some data.
    groupedRDDNew.collect.foreach(println)

    // Output
    // (A,CompactBuffer(Anvisha))
    // (J,CompactBuffer(John, Jimmy, Jacky, John, Jimmy, Jimmy))
    // (T,CompactBuffer(Tom))
    // (L,CompactBuffer(Lenevo))

As shown in the above example, the words starting with the same character are in the same group.
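Each group keeps every matching element, duplicates included, which is why John and Jimmy appear more than once under J. As a small illustration of working with the grouped values, the sketch below counts the distinct words per first character. The names `DistinctPerGroupSketch` and `distinctWordsByInitial` are hypothetical and used only for this example.

```scala
import org.apache.spark.sql.SparkSession

// Hypothetical helper object, not part of the original example.
object DistinctPerGroupSketch {

  // Counts the distinct words that start with each character.
  def distinctWordsByInitial(words: Seq[String]): Map[Char, Int] = {
    val sparkContext = SparkSession.builder()
      .master("local[*]")
      .appName("distinct per group sketch")
      .getOrCreate()
      .sparkContext

    sparkContext.parallelize(words)
      .groupBy(word => word.charAt(0)) // RDD[(Char, Iterable[String])]
      .mapValues(_.toSet.size)         // drop duplicates inside each group, then count
      .collect()
      .toMap
  }
}
```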

groupBy transformation complete Example

The complete example is also available in our GitHub repository.

    import org.apache.spark.sql.SparkSession

    object GroupByExample extends App {

      // Creating a SparkContext object.
      val sparkContext = SparkSession.builder()
        .master("local[*]")
        .appName("Proedu.co examples")
        .getOrCreate()
        .sparkContext

      val wordsRDD = sparkContext.parallelize(Array(
        "Tom", "Lenevo", "Anvisha",
        "John", "Jimmy", "Jacky",
        "John", "Jimmy", "Jimmy"
      ))

      // Group elements based on their length.
      val groupRDD = wordsRDD.groupBy(word => word.length)

      // Let's print some data.
      groupRDD.collect.foreach(println)

      /** Output
       * (3,CompactBuffer(Tom))
       * (4,CompactBuffer(John, John))
       * (5,CompactBuffer(Jimmy, Jacky, Jimmy, Jimmy))
       * (6,CompactBuffer(Lenevo))
       * (7,CompactBuffer(Anvisha))
       */

      // Group elements based on their first character.
      val groupedRDDNew = wordsRDD.groupBy(word => word.charAt(0))

      // Let's print some data.
      groupedRDDNew.collect.foreach(println)

      /** Output
       * (A,CompactBuffer(Anvisha))
       * (J,CompactBuffer(John, Jimmy, Jacky, John, Jimmy, Jimmy))
       * (T,CompactBuffer(Tom))
       * (L,CompactBuffer(Lenevo))
       */
    }

Happy Learning 🙂